Big Tech on Trial: Inside the Massive California Lawsuit Fighting for the Safety of Our Children

For years, parents in Brooklyn, Newark, and across the country have watched their children drift deeper into the glow of smartphones—often emerging more anxious, withdrawn, or changed in ways that are hard to name. That quiet fear is now turning into coordinated legal action. More than 1,000 families have filed a sweeping social media addiction lawsuit in California, accusing the largest tech platforms of knowingly endangering children for profit.

The lawsuit targets some of the most powerful companies in the digital economy—YouTube, Snapchat, TikTok, and Meta—arguing their products were deliberately engineered to addict young users while ignoring mounting evidence of mental health harm, grooming, and exploitation.

Designed to Hook: What Families Say Big Tech Knew

At the center of the case is a core allegation: these platforms were not neutral tools that happened to cause harm, but systems intentionally designed to maximize time-on-screen—especially for minors. Plaintiffs argue that internal research, safety warnings, and whistleblower concerns were sidelined in favor of growth metrics and ad revenue.

Families describe patterns that feel familiar to many parents: compulsive scrolling, emotional volatility, sleep disruption, and social withdrawal. In more severe cases, the lawsuit links platform use to anxiety disorders, depression, self-harm, and suicidal ideation.

While facts are still emerging through discovery, the claim is consistent: addiction was not a side effect; it was the business model.

The Meta Defense: Tobacco Lawyers and Sex Bot Allegations

Meta faces some of the sharpest scrutiny in the case. According to plaintiffs, the company retained legal counsel with experience defending tobacco companies—fueling comparisons between cigarette addiction and algorithm-driven engagement.

More troubling are allegations that Meta’s own AI systems helped direct sexualized or predatory accounts—often described as “sex bots”—toward minor users. Whistleblowers cited in filings claim internal safeguards failed repeatedly, allowing inappropriate content and interactions to persist even after being flagged.

Meta has denied wrongdoing, but the lawsuit argues the company repeatedly chose expansion over protection, even as risks to children became harder to ignore.

YouTube and the Grooming Crisis Hidden in Plain Sight

Often treated as an educational default in schools, YouTube occupies a unique role in children’s daily lives. The lawsuit alleges that internal research revealed alarming levels of harm and compulsive use among minors, and plaintiffs say that research was suppressed or its public release delayed.

Key allegations involving YouTube include:

- Predatory grooming: Bad actors allegedly use comment sections and messaging pathways to contact minors and solicit exploitative material.

- Sexual exposure: Claims reference internal findings that a significant share of minors reported sexual encounters initiated through platform contact. [Source needed]

- Unsafe search pathways: Plaintiffs argue that common search terms can surface explicit content that children later reenact or share.

- Addiction by design: Notifications, autoplay, and recommendation loops are cited as intentional mechanisms to keep children engaged regardless of harm.

For families whose schools rely on YouTube as a learning tool, the lawsuit raises difficult questions about digital supervision in classrooms.

Snapchat’s Quiet Exit: What the Confidential Settlement Signals

Snapchat has already reached a confidential settlement in part of this litigation, removing itself from ongoing public proceedings. While details remain sealed, filings outline several core concerns:

- Snapstreak pressure: Daily engagement mechanics that encourage anxiety-driven use.

- “My AI” risks: Allegations that the chatbot provided inappropriate advice to underage users about drugs and sex.

- Drug access: Claims that location-based features and “Quick Add” tools facilitated contact with fentanyl dealers, contact that plaintiffs link to overdose deaths.

The settlement itself may never be public—but its existence underscores the seriousness of the allegations.

TikTok’s Legal Pressure Campaign: Settlements, Spin, and Silence

TikTok has publicly positioned itself as a safer alternative to legacy social media companies, but it remains deeply entangled in youth harm litigation across the U.S. While TikTok has not announced a single global settlement covering all addiction-related lawsuits, it has resolved or moved to quietly close specific cases and claims in multiple jurisdictions over the past several years, including high-profile privacy and youth-related actions.

Plaintiffs in the current California litigation argue that TikTok’s strategy mirrors a familiar playbook: limit discovery, resolve individual cases before precedent is set, and maintain public ambiguity. Critics say this approach allows the platform to avoid full transparency about internal research on youth addiction, algorithmic amplification, and mental health impacts—issues that continue to draw bipartisan concern from lawmakers and child safety advocates.

Editor’s note: The full scope of TikTok’s settlements related specifically to youth addiction claims remains fragmented across jurisdictions.

The Global Double Standard: How China Restricts the Platforms the U.S. Lets Run Wild

One of the most striking contrasts raised by parents and advocates involves how social media operates outside the United States—particularly in China. While American children have near-unrestricted access to algorithm-driven platforms, China enforces strict controls on youth social media use through regulation, time limits, and content moderation.

In China, youth-facing versions of social platforms operate under government-mandated rules that limit daily screen time, restrict late-night usage, and aggressively filter content deemed harmful to minors. Recommendation algorithms are constrained, and educational or state-approved content is prioritized for younger users. The result is a tightly controlled digital environment—one that critics argue suppresses expression, but supporters say clearly prioritizes child welfare over profit.

For U.S. families, the comparison raises uncomfortable questions. If platforms can operate under strict safeguards elsewhere, why are American children left exposed to systems designed primarily to maximize engagement? The lawsuit argues this disparity undermines claims that meaningful child protection is technically impossible—it may simply be unprofitable.

A Global Reset on Age Limits—And How the U.S. Is Falling Behind

Around the world, governments are reassessing whether existing age limits for social media are meaningful—or merely symbolic. While the United States continues to rely on a patchwork of weak safeguards, several countries are moving toward higher age thresholds, mandatory parental consent, or stronger enforcement regimes that treat social media as a regulated product rather than a neutral space.

In Australia, Parliament passed legislation in late 2024 that bars children under 16 from holding accounts on certain social media platforms, citing mounting evidence of mental health harm. The law is one of the most aggressive stances taken by a Western democracy and signals a shift away from self-regulation by tech companies.

In France, legislation has moved toward requiring parental consent for social media use under age 15, with fines for platforms that fail to comply. French officials have framed the issue explicitly as a public health concern rather than a parenting failure.

Across Europe, age standards can already exceed those in the U.S. Under the EU’s data protection framework, member states can set the age of digital consent between 13 and 16. Several European countries are actively debating raising that floor to 15 or higher, paired with stronger enforcement obligations for platforms. Among them is Norway, which is not an EU member but applies the framework through the European Economic Area.

By contrast, the United States remains anchored to a 13-year-old minimum, derived largely from the Children’s Online Privacy Protection Act (COPPA). That law focuses narrowly on data collection—not addiction, algorithmic amplification, or mental health—and enforcement has been widely criticized as inadequate. Most platforms rely on self-reported birthdays, making the age limit largely symbolic.

The growing international contrast sharpens one of the lawsuit’s core arguments: if stronger protections are feasible elsewhere, then American inaction is a policy choice—not a technical limitation. For families watching other countries move decisively while U.S. standards stagnate, the question becomes harder to ignore: why are American children afforded the weakest protections in the digital world they use the most?

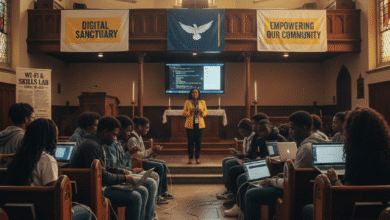

The HfYC Lens: Why This Hits Black Communities Harder

In Newark and Brooklyn, social media isn’t just entertainment—it’s connection, culture, and visibility in a world that often overlooks Black youth. But that same high engagement makes Black children especially vulnerable to exploitative algorithms, data harvesting, and predatory behavior.

When under-resourced schools default to digital platforms without robust safeguards, families shoulder the fallout alone. This lawsuit isn’t just about corporate accountability—it’s about digital sovereignty, mental health, and whether the next generation is being protected or monetized.

Key Takeaways

- Families allege social media platforms knowingly designed addictive systems for minors.

- The lawsuit frames Big Tech practices as comparable to past public health crises.

- Schools’ reliance on platforms like YouTube raises new safety concerns.

- Black communities may face heightened risk due to higher youth engagement.

HfYC Poll of the Day

Follow us and respond on social media, drop some comments on the article, or write your own perspective!

Is your child’s school doing enough to monitor the content students watch on YouTube during class hours?

- Yes, they are very proactive

- No, it’s mostly unmonitored

- I’m not sure what the policy is

- We should ban YouTube in schools entirely

Poll Question Perspectives

- Should schools rethink using commercial social media platforms for education?

- Are parents being left out of decisions about classroom technology?

- Has social media become too embedded in childhood to regulate effectively?

Related HfYC Content

- 11 PM in Newark: Safety, Freedom, and the Generation Caught in the Middle

- Brooklyn’s Digital Lifeline: How the NYC Digital Equity Roadmap 2025 Hits Home

- Dr. Cheyenne Bryant and the Hard Lessons About Black Credibility in Digital Spaces

- Has Social Media Changed Dating? Millennial Men Edition

Other Related Content

- Wall Street Journal: States and Families Accuse Social-Media Companies of Harming Children. https://www.wsj.com/articles/social-media-lawsuits-children-harm-meta-tiktok-youtube-11697318400

- ProPublica: How Social Media Platforms Profit From Children. https://www.propublica.org/article/social-media-children-addiction-mental-health

- The Markup: YouTube’s Algorithm Pushed Videos About Guns, Drugs, and Sex to Kids. https://themarkup.org/2023/04/19/youtubes-algorithm-pushed-videos-about-guns-drugs-and-sex-to-kids

- New York Times: Hundreds of Families Sue Social Media Companies Over Youth Addiction. https://www.nytimes.com/2023/10/24/technology/social-media-lawsuit-children.html

- Reuters: U.S. States, Families Press Meta, TikTok, and Others Over Child Safety. https://www.reuters.com/technology/us-states-families-sue-meta-tiktok-youth-safety-2023-10-24/

Community Call

Has your family been impacted by social media addiction? Share your story at HereForYouCentral.com or join the conversation in our Newark and Brooklyn community groups.

Your voice is the front page.